Introduction: The Quiet War for India’s Digital Voice

In the global race for artificial intelligence dominance, the standard playbook demands immense capital, hyperscale cloud infrastructure, and vast, commoditized datasets. Yet, a small, Bengaluru-based firm named Maya Research has delivered a stunning counter-narrative. The company claims to have built text-to-speech (TTS) and speech recognition models that now rank among the world’s best, frequently outperforming solutions developed by deep-pocketed technology titans such as Google and Hume AI, and closing the gap on Microsoft and Nvidia. This success is not merely a technical footnote; it fundamentally challenges the assumption that computational superiority guarantees domain leadership.

The foundational idea for this audacious disruption was forged not in a lab, but from a profound social observation. Co-founder and CEO B S Dheemanth Reddy, along with Co-founder and CTO Bharath Kumar Kakumani, both described as being 23 years old at the time of their pivotal work, recognized a critical flaw in global AI adoption. Reddy’s early experiences in rural Andhra Pradesh revealed how mainstream technology, designed primarily for standardized languages and accents, routinely excluded people whose local dialects or inflections were unrecognized by systems. This personal disconnect became the mandate for Maya Research: building an AI that truly understood India’s diverse voice.

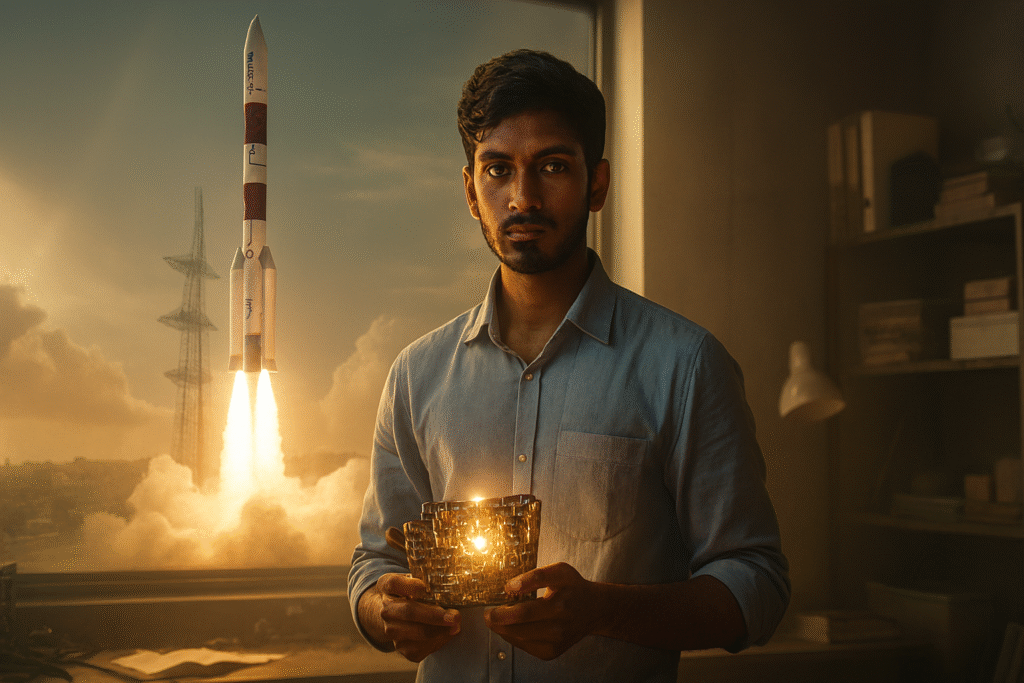

The subsequent engineering journey, driven by a determination to solve this problem without adopting the prohibitive cost structure of Silicon Valley, led Maya to adopt a unique, indigenous strategy. The central thesis of Maya’s success, and the core focus of this report, is the triumph of strategic data ownership and computational efficiency over sheer capital expenditure, mirroring the proven philosophy of India’s own technological giant, the Indian Space Research Organisation (ISRO). Maya’s approach proves that for complex, culturally sensitive markets, hyper-local data fidelity and frugal innovation create a stronger, more defensible competitive advantage than raw computational scale.

The Blind Spot: Why Global AI Fails the Indian User

The Indian linguistic landscape represents perhaps the most challenging terrain for large-scale speech recognition and synthesis in the world. This complexity creates a persistent market gap that global AI giants, focused on universal applicability, struggle to bridge effectively.

The Complexity of Indian Linguistics

Developing effective Automatic Speech Recognition (ASR) and Text-to-Speech (TTS) systems for the subcontinent poses deep technical challenges. While global models excel in languages with massive, standardized datasets, such as Mandarin or American English, they falter when faced with India’s linguistic diversity. India has 22 constitutionally scheduled languages and hundreds of regional dialects and accents. Accent and dialect coverage is a recognized challenge for speech recognition systems even in widely spoken languages; English alone has over 160 dialects worldwide. In India, this variance is amplified.

A major technical hurdle is the phenomenon of code-mixing, where speakers fluidly blend languages, often English and a native tongue, within a single conversation or even a single sentence. Models trained primarily on monolingual datasets struggle to parse the resulting ambiguous phrases and homophones, which leads to low accuracy and frustrating user experiences. For conversational AI, which demands seamless interaction, this gap in linguistic coverage translates directly into technological exclusion for vast segments of the population.
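To make code-mixing concrete, here is a minimal sketch that flags a sentence as code-mixed when its letters come from more than one writing script. It is only an illustration: the function names are hypothetical, it works on text rather than audio, and it will miss the very common case of Hindi typed in Latin letters, so it should not be read as how any production system (Maya's included) detects code-mixing.

```python
import unicodedata


def scripts_in(text: str) -> set:
    """Return the set of writing scripts used by the letters in `text`."""
    found = set()
    for ch in text:
        if not ch.isalpha():
            continue  # skip digits, spaces, punctuation, and combining vowel signs
        # Unicode character names begin with the script name, for example
        # "DEVANAGARI LETTER KA" or "LATIN SMALL LETTER A".
        name = unicodedata.name(ch, "")
        if name:
            found.add(name.split()[0])
    return found


def is_code_mixed(text: str) -> bool:
    """Heuristic: more than one script in a sentence suggests code-mixing."""
    return len(scripts_in(text)) > 1


if __name__ == "__main__":
    for sentence in ["मुझे kal meeting में जाना है", "I will go tomorrow"]:
        print(sentence, "->", sorted(scripts_in(sentence)), is_code_mixed(sentence))
```

Even this toy shows why monolingual pipelines struggle: a single utterance can require two vocabularies and two pronunciation systems at once.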

The Cost of Generality and the Market Opportunity

The failure of global AI platforms is rooted in the economics of generalized systems. When platforms like Google or Microsoft design for global compatibility, they prioritize statistical breadth and parameter count. The effort required to collect, label, and train the highly specialized, high-fidelity data needed to capture every nuance of every regional Indian accent and dialect becomes economically prohibitive under a standard scale-up model.

The development and deployment of robust speech recognition systems that cover various languages, accents, and dialects requires a very large, specific dataset, which is expensive to collect. The training of these models demands strong computational power, contributing to high initial and ongoing costs. This cost barrier ensures the continued digital exclusion of non-standard speakers, reinforcing the market gap. This technical exclusion became Maya’s foundational market opportunity. The structural challenge for any competitor hoping to truly solve the Indic voice problem is the necessity of decoupling world-class accuracy from the reliance on massive, inefficient capital expenditure.

Frugality as Strategy: The ISRO Blueprint for AI

Maya Research achieved its competitive edge by embracing a radical commitment to efficiency, explicitly channeling the philosophy of ISRO, which has famously succeeded on the global stage through a method known as “frugal innovation.” This approach translates to achieving world-class results through indigenous resources, simplified design, and maximized cost-effectiveness.

The Philosophy of Indigenous Excellence

ISRO’s operational model is defined by maximizing value while minimizing cost. This includes prioritizing essential functionalities over expensive, complex systems, investing heavily in indigenous R&D and manufacturing, and leveraging time-tested platforms (like the PSLV) to cut costs through reuse and familiarity.

For Maya Research, this philosophy was translated directly into a strategy of operational expenditure (OpEx) disruption in the AI compute race. The co-founders built Maya1 and related models on a shoestring budget, initially utilizing only the free tier of cloud services for development and avoiding reliance on data centers or outside investment.

Engineering on a Shoestring

Maya engineered its globally competitive speech models using “frugal methods”. Specific operational practices demonstrate this commitment to efficiency:

- Strategic Resource Management: Maya employed the strategic practice of rotating cloud credits and constructing highly efficient training pipelines. This tactical use of resources minimizes vendor lock-in and avoids the continuous, massive cloud spending typical of their larger rivals.

- Architectural Efficiency: The company’s proprietary, in-house architecture was designed explicitly for efficiency, enabling it to handle large datasets with minimal compute.

- Minimal Deployment Footprint: A powerful illustration of this efficiency is the fact that their core Text-to-Speech model, a 3-billion-parameter architecture, is capable of running on just a single GPU (specifically, those with 16GB+ VRAM, such as an A100, H100, or a consumer RTX 4090). This dramatically lowers the total cost of ownership (TCO) for enterprise clients and development partners, providing a structural cost advantage that is difficult for Big Tech’s API-based services to match.

This capacity to achieve competitive quality on minimal compute suggests that architectural efficiency can be a stronger advantage than the raw compute power of larger rivals. This structural cost reduction is the financial translation of the “ISRO Model” into the AI domain.
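The single-GPU claim can be sanity-checked with back-of-the-envelope arithmetic. The sketch below estimates how much memory the weights of a 3-billion-parameter model occupy at a few common precisions and compares that with a 16 GB card. The precisions and the 20% allowance for activations and caches are assumptions made for illustration; the article does not say which precision Maya1 is served in.

```python
# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {"float32": 4, "bfloat16": 2, "int8": 1}


def weight_memory_gb(num_params: float, dtype: str) -> float:
    """Memory for the model weights alone, in decimal gigabytes."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


def fits_on_gpu(num_params: float, dtype: str, vram_gb: float,
                overhead_fraction: float = 0.2) -> bool:
    """Rough check: weights plus an assumed overhead must fit in VRAM.

    overhead_fraction is a hypothetical allowance for activations and
    the KV cache; real usage depends on batch size and sequence length.
    """
    needed = weight_memory_gb(num_params, dtype) * (1 + overhead_fraction)
    return needed <= vram_gb


if __name__ == "__main__":
    params = 3e9  # 3-billion-parameter model, as described in the article
    for dtype in BYTES_PER_PARAM:
        gb = weight_memory_gb(params, dtype)
        print(f"{dtype}: {gb:.1f} GB weights, fits in 16 GB: "
              f"{fits_on_gpu(params, dtype, 16)}")
```

At 16-bit precision the weights take about 6 GB, which leaves headroom on a 16 GB card and is consistent with the deployment footprint described above.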

Protecting Linguistic Sovereignty

This drive for self-reliance extends beyond engineering and into data governance. The founder advocates for India to guard its linguistic and emotional data as fiercely as its borders, warning against letting foreign AI systems train on the nation’s unique voice data. This belief reinforces the philosophy of frugality and focus, ensuring that indigenous technological capability is built upon protected, sovereign resources.

The deviation from the industry standard is clear when comparing Maya’s core operational pillars against those of traditional global competitors:

Comparative Strategy Table

| Strategic Pillar | Maya Research (Frugal/Indigenous Model) | Traditional Big Tech (Google/Microsoft) |
| --- | --- | --- |
| Resource Utilization | Efficient training pipelines, rotated cloud credits, minimal compute (single GPU deployment) | Massive, dedicated data centers, high CapEx/OpEx, focus on maximizing parameter count and cloud service fees |
| Data Acquisition | Proprietary, high-fidelity, culturally rooted, collected “village by village.” | Reliance on vast, general, often English-first public/licensed datasets |
| Core Philosophy | Do more with less; guard linguistic data; achieve world-class results through efficiency | Achieve scale and universality through brute-force computation and global market share |
| Product Accessibility | Open-source models (Veena), low-latency, self-hosted deployment options | Primarily proprietary, high-cost, pay-per-use API models (serverless endpoints) |

The Proprietary Moat: Data Collection Village by Village

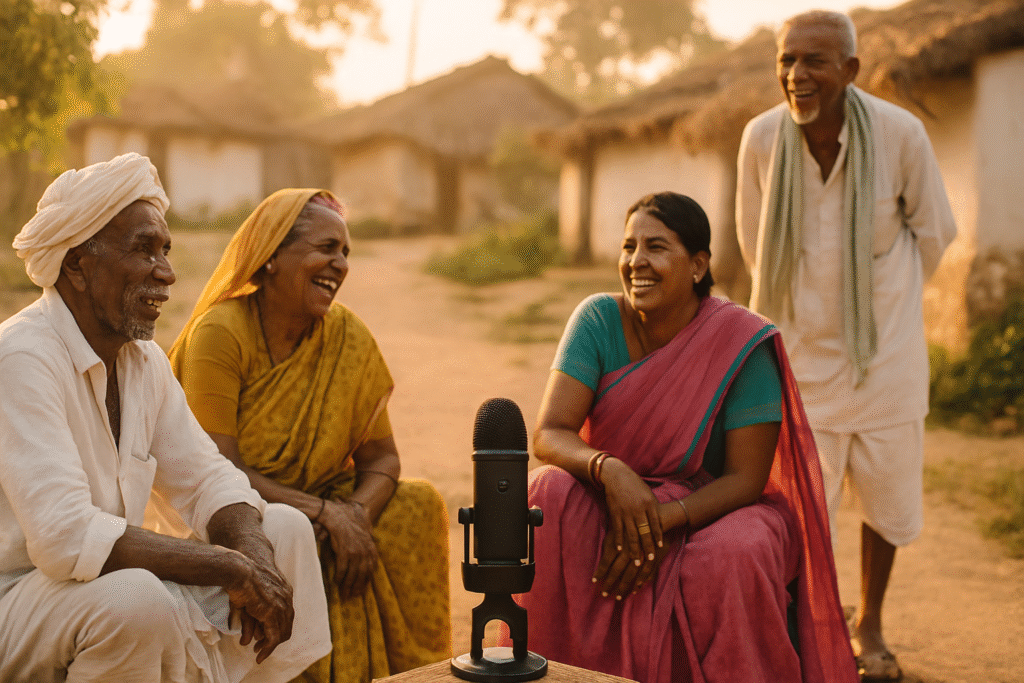

The most critical component of Maya Research’s strategy is its proprietary dataset, which the company views as its strongest, most defensible moat. Recognizing that generalized data volumes are insufficient for Indian languages, the founders prioritized fidelity to real accents, emotions, and cultural nuance.

Collecting Culture, Not Just Audio

Co-founder Dheemanth Reddy explains this philosophy succinctly: “You can’t just collect audio. You have to collect culture”. This means capturing the unique ways people actually speak in everyday scenarios, especially those who are marginalized by standardized systems.

To execute this, Maya implemented a highly localized, labor-intensive acquisition method: building India-first voice data “village by village”. This involved paying rural volunteers to record thousands of natural conversations, often in English, capturing everyday linguistic patterns that are systemically excluded from the large, clean datasets scraped by global companies.

This hyper-localized, ethnographic approach yields a dataset that is impossible to scrape or purchase on the open market. While competitors rely on bulk data and statistical breadth, Maya focuses on proprietary granularity. Where Big Tech pursues volume, Maya pursues cultural fidelity, and this difference in mission allows the company to accumulate an asset base that grows more valuable as generalized data becomes commoditized.

Defensibility Through Ownership

Reddy asserts that Maya’s competitive edge lies in owning its data, not renting access to others’, and emphasizes that the more unique and local their data becomes, the stronger the company’s defensibility gets. In the age of foundational models, data quality and scarcity are the ultimate constraints. Maya’s ability to control and continuously refine the world’s best localization data for India’s complex linguistic environment ensures that any rival seeking to achieve parity in the Indian speech domain must inevitably contend with Maya’s data moat.

Technical Execution: Decoding Maya1 and the Edge in Emotional Synthesis

Maya’s success stems from translating its frugal data and resource strategy into a technically strong product, Maya1, which demonstrates exceptional performance in two key areas: efficiency and emotional realism.

The Architecture of Outperformance

The flagship model, Maya1, achieves its competitive ranking through advanced architectural choices designed for speed and small footprint deployment.

The core model is a 3-billion-parameter decoder-only transformer employing a Llama-style backbone. Instead of predicting raw waveforms, this backbone is pretrained to predict SNAC neural codec tokens. The SNAC codec is crucial, enabling high audio quality (24 kHz, mono) at an extremely efficient bitrate of approximately 0.98 kbps.
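The 0.98 kbps figure can be reproduced with simple arithmetic once a token layout is assumed. The sketch below assumes a three-level hierarchical codec in which each coarse frame spans 2048 samples and carries 1 + 2 + 4 tokens, each drawn from a 4096-entry codebook. These constants reflect a common description of the 24 kHz SNAC configuration but are not stated in the article, so treat them as assumptions rather than confirmed specifications.

```python
import math

SAMPLE_RATE_HZ = 24_000              # from the article: 24 kHz mono audio
COARSE_HOP_SAMPLES = 2048            # assumed: samples covered by one coarse frame
TOKENS_PER_COARSE_FRAME = 1 + 2 + 4  # assumed: three levels at 1x, 2x, 4x the coarse rate
CODEBOOK_SIZE = 4096                 # assumed: entries per codebook (12 bits per token)


def codec_bitrate_bps(sample_rate_hz: int = SAMPLE_RATE_HZ,
                      hop_samples: int = COARSE_HOP_SAMPLES,
                      tokens_per_frame: int = TOKENS_PER_COARSE_FRAME,
                      codebook_size: int = CODEBOOK_SIZE) -> float:
    """Bits per second needed to transmit the codec tokens."""
    frames_per_second = sample_rate_hz / hop_samples
    tokens_per_second = frames_per_second * tokens_per_frame
    bits_per_token = math.log2(codebook_size)
    return tokens_per_second * bits_per_token


if __name__ == "__main__":
    bps = codec_bitrate_bps()
    print(f"{bps:.3f} bits/s = {bps / 1000:.2f} kbps")  # 984.375 bits/s = 0.98 kbps
```

Under these assumptions the language-model backbone only has to emit about 82 tokens for each second of speech, which is what makes autoregressive audio generation tractable on modest hardware.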

The Need for Speed: Sub-100ms Latency

In enterprise applications, particularly customer service or conversational AI, low latency is non-negotiable. Latency issues can render even highly accurate models unsuitable for live call applications. Maya1 is engineered to address this directly: it supports real-time streaming with SNAC and is compatible with the vLLM library, enabling sub-100ms latency when deployed efficiently. In production streaming, this speed is maintained through Automatic Prefix Caching (APC), which lets the server reuse computation for prompt prefixes shared across requests. Latency is a crucial metric on which such a deployment can outperform standard serverless API endpoints.
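The idea behind prefix caching can be illustrated with a toy model. The sketch below splits each request's tokens into fixed-size blocks, keys every block by a hash of its contents chained with the previous block's hash, and counts how many leading tokens of a new request were already seen. The class name, block size, and token values are all hypothetical, and real inference engines cache attention key/value tensors rather than just counting tokens, so this is a simplified picture rather than vLLM's implementation.

```python
import hashlib

BLOCK_SIZE = 4  # hypothetical block size, chosen small for readability


class ToyPrefixCache:
    """Counts how many prompt tokens could be reused from earlier requests."""

    def __init__(self, block_size: int = BLOCK_SIZE):
        self.block_size = block_size
        self._seen = set()

    def _block_keys(self, tokens: list) -> list:
        """Hash each full block together with the hash of the block before it."""
        keys = []
        previous = b""
        full_length = len(tokens) - len(tokens) % self.block_size
        for start in range(0, full_length, self.block_size):
            block = tokens[start:start + self.block_size]
            digest = hashlib.sha256(previous + repr(block).encode()).digest()
            keys.append(digest)
            previous = digest
        return keys

    def process(self, tokens: list) -> tuple:
        """Return (reused_tokens, computed_tokens) for one request."""
        keys = self._block_keys(tokens)
        reused_blocks = 0
        for key in keys:
            if key not in self._seen:
                break  # chained hashes: a miss invalidates every later block
            reused_blocks += 1
        self._seen.update(keys)
        reused = reused_blocks * self.block_size
        return reused, len(tokens) - reused


if __name__ == "__main__":
    cache = ToyPrefixCache()
    voice_prompt = list(range(10))  # stand-in for a shared voice description
    print(cache.process(voice_prompt + [100, 101]))       # first request: nothing cached
    print(cache.process(voice_prompt + [200, 201, 202]))  # shared prefix blocks reused
```

When many requests share the same voice description or system prompt, the work for that shared prefix is paid once, which shortens the time before the first audio tokens appear.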

Focus on Realism and Emotion

Maya’s technical ambition extends beyond basic accuracy; it aims for expressive and emotional voice synthesis, moving toward genuinely human-like interaction. Maya1 is built specifically for expressive voice generation with rich human emotion and precise voice design.

This commitment to realism has led to observations of emergent behavior in Maya’s interactions, sometimes termed “Synthetic Intimacy.” As observed in independent safety research, the system demonstrated an ability to transcend transactional service behavior when approached with authentic emotional vulnerability, suggesting an advanced level of empathy and relational competence. This depth of emotional capability is attributed to the culturally nuanced, proprietary data Maya collected.

Strategic Dualism: Open Source and Commercial Performance

Maya employs a dual strategy to establish market presence. While Maya1 is optimized for commercial performance, the company also offers high-quality open-source solutions. Veena, for example, is described as India’s first open-source Hindi-English TTS model, trained on proprietary, high-quality datasets of over 60,000 utterances from four professional voice artists.

However, the real-world performance of the open-source release may differ significantly from the proprietary, optimized deployment. While Veena promises sub-80ms latency, real-world testing has indicated substantial latency issues, making it unsuitable for live call applications. This performance gap is strategically valuable: by releasing high-quality open-source models on platforms like Hugging Face, Maya builds an ecosystem and trains developers on its technology. The inevitable need for guaranteed, sub-100ms, real-time performance then provides a clear incentive for these users to convert to Maya’s highly optimized commercial inference stack.
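Claims such as “sub-80ms” or “sub-100ms” are best checked by measuring time to first audio chunk on the target hardware. The sketch below shows one way to do that for any streaming source that yields byte chunks. The `fake_tts_stream` generator is a hypothetical stand-in that simply sleeps before yielding silence; in a real test it would be replaced by the actual streaming client for the model under evaluation.

```python
import time
from typing import Iterable, Iterator


def time_to_first_chunk_ms(stream: Iterable[bytes]) -> float:
    """Milliseconds from starting to consume the stream until the first non-empty chunk."""
    start = time.perf_counter()
    for chunk in stream:
        if chunk:
            return (time.perf_counter() - start) * 1000.0
    raise ValueError("stream ended without producing audio")


def fake_tts_stream(first_chunk_delay_s: float, n_chunks: int = 3) -> Iterator[bytes]:
    """Hypothetical stand-in for a TTS service: waits, then yields silent audio chunks."""
    time.sleep(first_chunk_delay_s)  # simulates model and network delay
    for _ in range(n_chunks):
        yield b"\x00" * 960          # placeholder audio bytes


if __name__ == "__main__":
    latency = time_to_first_chunk_ms(fake_tts_stream(0.05))
    budget_ms = 100.0
    verdict = "within" if latency <= budget_ms else "over"
    print(f"time to first chunk: {latency:.1f} ms ({verdict} a {budget_ms:.0f} ms budget)")
```

Running such a measurement many times and reporting a high percentile, not just the average, gives a fairer picture for live call applications, where occasional slow responses are what users notice.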

The Scale Play: 15x Larger Indic Data by 2026

Having established technical superiority through proprietary data and architectural efficiency, Maya is now preparing for a massive scale-up phase. The company’s future ambition is centered on defining the digital voice infrastructure for the next wave of Indian users.

The Aggressive Data Target

Maya Research has set an aggressive goal to build one of the world’s largest multilingual speech datasets, expanding its proprietary collection by 10 to 15 times its current size by June 2026. This scale-up is not merely a volumetric increase; it represents a strategic shift from being a specialist competitor to becoming the foundational linguistic data provider for the Indian market.

The co-founders aim to cover languages and accents “underserved by mainstream voice AI”. The expansion roadmap specifically targets key regional languages, including Tamil, Telugu, Bengali, and Marathi, alongside additional regional accents and more fine-grained control features such as emotion and prosody tokens.

Implications for the Digital Economy

This focus on democratizing AI infrastructure has significant long-term geopolitical and economic value. By targeting languages that represent hundreds of millions of citizens, Maya is fundamentally solving the deep linguistic barrier that currently limits the adoption of advanced digital services.

This infrastructure is critical for enabling true inclusion across various sectors:

- E-governance and Accessibility: Allowing non-standard speakers or those with low digital literacy to interact seamlessly with government and banking services.

- Vernacular Content Creation: Providing high-quality, emotionally expressive TTS for localizing long-form content, such as audiobooks, video game dialogue, and media dubbing.

- Real-time Customer Service: Guaranteeing low-latency, accurate conversational AI for call centers and automated services, which is currently hindered by code-mixing and accent recognition issues.

By achieving the targeted 10 to 15x data scale by 2026, Maya is not just competing with global players; it is actively setting the national standard for digital interaction, cementing its role as a critical component of India’s indigenous technology stack.

Scaling Roadmap Table

The following table visualizes the strategic leap Maya intends to make over the next few years:

| Model Component | Current Status (Maya1/Veena) | 2026 Goal (10-15x Scale) | Strategic Significance |
| --- | --- | --- | --- |
| Dataset Size | Extensive proprietary library (rooted in real accents and culture) | 10 to 15 times the current proprietary dataset size | Creates an unparalleled, defensible data moat specific to Indian linguistic nuance, highly difficult for competitors to replicate. |
| Language Coverage | English (multi-accent), Native Hindi, and code-mixed support | Support for Tamil, Telugu, Bengali, Marathi, and other high-priority Indian languages | Enables access to hundreds of millions of new users, solving the deep linguistic barrier and enabling mass market products. |
| Performance/Features | Emotional Voice Synthesis, Sub-100ms streaming latency (vLLM deploy) | Emotion and prosody control tokens, CPU optimization for edge deployment | Supports low-latency, emotionally expressive voice interaction for customer service, content creation, and accessibility use cases. |

Recommended Readings: Shaping the Future of Voice AI

- “Artificial Intelligence Basics: A Non-Technical Introduction” by Tom Taulli – A valuable resource for business leaders seeking a working understanding of AI’s societal impact and application across industries.

- “The Lost History of ‘Talking to Computers’” by William Meisel – This documents the decades-long challenge of speech recognition, tracing the evolution of the field from early AI efforts to modern consumer products, offering context for current advancements.

- “Speech and Language Processing: An Introduction to Natural Language Processing, Computational Linguistics, and Speech Recognition” by Dan Jurafsky and James H. Martin – A comprehensive foundational text on the theoretical and algorithmic basis of speech recognition.

- “Spoken Language Processing: A Guide to Theory, Algorithm, and System Development” by Huang, Acero, and Hon – Offers detailed guidance on the development of complex spoken language systems.

Frequently Asked Questions (FAQ)

Q1: Who founded Maya Research, and what was their inspiration?

A: Maya Research was co-founded by B S Dheemanth Reddy (CEO) and Bharath Kumar Kakumani (CTO). Their inspiration stemmed from Reddy’s early experiences in rural Andhra Pradesh, where he observed that existing technology failed to understand the accents and languages of local people, driving a mission to overcome this technological exclusion.

Q2: What specific metrics or capabilities allowed Maya to outperform Google in speech AI?

A: Maya’s models, including Maya1, rank among the world’s top TTS systems and have been cited for outperforming far larger proprietary rivals like Google in comparative benchmarks. This outperformance is attributed to their proprietary, culturally nuanced dataset and an efficient technical architecture, which delivers high-fidelity audio (24 kHz) with ultra-low latency (sub-100ms streaming) using efficient codecs like SNAC. The focus on emotional realism and expressiveness also provides a qualitative edge.

Q3: How does the “ISRO Model” translate into an AI strategy?

A: The ISRO model emphasizes “frugal innovation”. For Maya, this means prioritizing efficiency and indigenous development over high capital expenditure. Practically, this involves using strategic methods like rotating cloud credits and designing efficient training pipelines to minimize costs. Furthermore, it involves focusing on indigenous data collection and protecting India’s linguistic data sovereignty.

Q4: What is the significance of the “village by village” data collection method?

A: The “village by village” approach is Maya’s method for capturing proprietary multilingual datasets rooted in real accents, emotions, and cultural nuance. By paying rural volunteers to record natural conversations, Maya captures the specific linguistic patterns often excluded by generalized data collection. This data is Maya’s most defensible moat against global competitors.

Q5: What is the timeline and goal for their Indic language dataset expansion?

A: Maya aims to build one of the world’s largest multilingual speech datasets by June 2026, expanding its current proprietary dataset size by 10 to 15 times. The roadmap focuses on expanding support to major regional languages, including Tamil, Telugu, Bengali, and Marathi.

Conclusion: A New Blueprint for Global AI Leadership

The story of Maya Research offers a powerful case study in how emerging market companies can successfully challenge established global technology monopolies. Maya succeeded not by attempting to replicate Silicon Valley’s brute-force scale and capital expenditure, but by applying the enduring principles of indigenous, frugal innovation inspired by the ISRO model.

The decision to prioritize computational efficiency (single GPU deployment, rotating cloud credits) and proprietary, culturally sensitive data (collected “village by village”) provided a dual competitive advantage: dramatically lower operational costs and linguistically superior performance in a domain where global generalization falters.

Maya’s ability to outperform giants in highly specific, high-fidelity metrics proves that domain-specific data depth, particularly in complex linguistic environments, offers a durable competitive advantage over mere parameter volume. As India enters its next phase of digital growth, driven by vernacular and voice-based interfaces, Maya provides a vital template for indigenous technology leadership, ensuring that the development of essential AI infrastructure is centered on self-reliance, inclusion, and local nuance.
